@roger

I just finished a demo based on yours, and the upload part works perfectly!

I’m now facing another problem, though.

- When getting the job result, I need to wrap the call in a timeout; otherwise it won’t find the file.

- Then I want to update a record (a Location Record) to add the image I just uploaded.

I’m doing that in the function `updateLocationRecord()`, which throws the error below.

This function was working fine in the other version, so maybe the problem is that, during the upload, the job result isn’t ready yet, so there’s no image to add.
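One thing that may be contributing (an assumption worth checking): `setTimeout` only schedules its callback and returns immediately, so in the submit function below the `await updateLocationRecord(...)` call runs before the 5-second callback that fetches the job result ever fires. A minimal sketch contrasting a bare `setTimeout` with an awaitable delay; `sleep` and `demoOrdering` are hypothetical names for illustration:

```typescript
// Hypothetical helper: a Promise-based delay that an async function can await.
// Unlike a bare setTimeout, awaiting it actually pauses the surrounding function.
function sleep(ms: number): Promise<void> {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Records the order in which steps run, to contrast the two approaches.
async function demoOrdering(): Promise<string[]> {
  const log: string[] = [];

  // Bare setTimeout: the callback is only scheduled; execution continues at once.
  setTimeout(() => log.push("timeout callback"), 10);
  log.push("after setTimeout");

  // Awaited delay: nothing below this line runs until the delay has elapsed.
  await sleep(20);
  log.push("after await sleep");

  return log;
}
```

Running `demoOrdering()` logs "after setTimeout" first, because the scheduled callback has not fired yet at that point; only code placed after an `await` waits for the delay.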

The Error

```
Uncaught (in promise) Error: PUT https://site-api.datocms.com/items/AgxGiQhTSFOQxH1hZgIajw: 422 Unprocessable Entity
[
  {
    "id": "a51de5",
    "type": "api_error",
    "attributes": {
      "code": "INVALID_FIELD",
      "details": {
        "field": "images",
        "field_id": "kGKs1SU1Qn6kSM5ORyEWoA",
        "field_label": "Images",
        "field_type": "gallery",
        "errors": [
          "Upload ID is invalid"
        ],
        "code": "INVALID_FORMAT",
        "message": "Value not acceptable for this field type",
        "failing_value": [
          {
            "alt": null,
            "title": null,
            "custom_data": {},
            "focal_point": null,
            "upload_id": "c867cb3c973d8b74e61e6ed8"
          }
        ]
      }
    }
  }
]
```

Location Record Function

```ts
export async function updateLocationRecord(array, id, locationId) {
  // I'm using an array so I can later check whether there are already images there
  const updateRecord = {
    alt: null,
    title: null,
    custom_data: {},
    focal_point: null,
    upload_id: id,
  };

  array.push(updateRecord);

  return await client.items.update(locationId, {
    images: array,
  });
}
```
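Since the comment mentions checking for images that are already in the gallery, here is a minimal sketch of building the new `images` value without mutating the array passed in, and skipping an upload ID that is already present. The entry shape mirrors `updateRecord` above and the `failing_value` in the error; the `GalleryItem` type and `appendToGallery` helper are hypothetical names:

```typescript
// Shape of one gallery entry, mirroring the object built in updateLocationRecord().
// The type name is hypothetical; focal_point is left loosely typed since only null is used here.
interface GalleryItem {
  alt: string | null;
  title: string | null;
  custom_data: Record<string, unknown>;
  focal_point: unknown;
  upload_id: string;
}

// Returns a new array with the upload appended, leaving `existing` untouched.
// If the upload ID is already in the gallery, a plain copy is returned instead.
function appendToGallery(existing: GalleryItem[], uploadId: string): GalleryItem[] {
  if (existing.some((item) => item.upload_id === uploadId)) {
    return [...existing];
  }
  return [
    ...existing,
    { alt: null, title: null, custom_data: {}, focal_point: null, upload_id: uploadId },
  ];
}
```

Inside `updateLocationRecord()` this would take the place of the `push`, e.g. `images: appendToGallery(array, id)`. Note that it does not check whether `uploadId` refers to a real upload; that still depends on which ID is passed in.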

The whole submit function

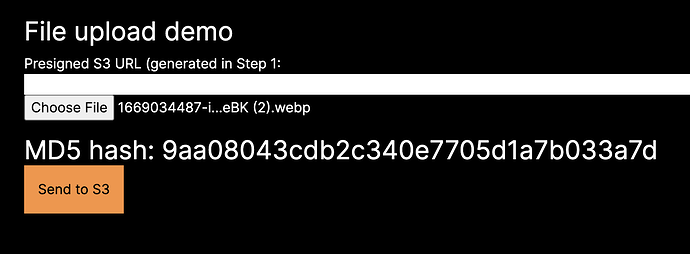

```ts
onDrop: async (acceptedFiles) => {
  setIsLoading(true);
  let imagesArray: any = [];

  for (let file of acceptedFiles) {
    const s3url = await getPermissionData(file.name);

    // works great!
    const uploadFile = await fetch(s3url.data.attributes.url, {
      body: file,
      method: "PUT",
      headers: {
        "Content-Type": file.type,
      },
    });

    const getFileData = await uploadFileData(s3url.data.id);

    // had to do this to get the job result
    setTimeout(async () => {
      const jobResult = await getJobResult(getFileData.data.id);
      console.log("jobResult: ", jobResult);
    }, 5000);

    // here I want to update the record where the image needs to be used
    await updateLocationRecord(
      imagesArray,
      getFileData.data.id,
      locationId,
    );

    // and then publish the record
    await publishRecord(locationId);

    setIsLoading(false);
  }
},
```
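Instead of a fixed 5-second timeout, one option is to poll until the job result is available and only then update the record. A minimal sketch, assuming a hypothetical `fetchResult` callback that resolves to `null` while the job is still running (how your `getJobResult()` actually signals "not ready yet" may differ, for example an HTTP error, so it would need adapting). It may also be worth checking which ID ends up in `upload_id`: the code passes `getFileData.data.id`, the same value given to `getJobResult()`, so it may be the job's ID rather than the upload's, which would fit the "Upload ID is invalid" message. If so, the upload's ID would presumably come from the finished job result instead.

```typescript
// Hypothetical options for the polling loop.
interface PollOptions {
  intervalMs: number; // wait between attempts
  maxAttempts: number; // give up after this many calls
}

const wait = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Calls fetchResult() until it resolves to a non-null value, waiting between tries.
// Rejects if the result is still not ready after maxAttempts calls.
async function pollUntilReady<T>(
  fetchResult: () => Promise<T | null>,
  { intervalMs, maxAttempts }: PollOptions,
): Promise<T> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = await fetchResult();
    if (result !== null) {
      return result;
    }
    // Only wait if another attempt will follow.
    if (attempt < maxAttempts) {
      await wait(intervalMs);
    }
  }
  throw new Error(`Result not ready after ${maxAttempts} attempts`);
}
```

In `onDrop`, the `setTimeout` block would become an awaited `pollUntilReady(...)` call, and `updateLocationRecord()` would run only after it resolves, so the record update can use data from the completed job.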

Yeah, we went this route because we needed a feature we requested here that wasn’t available. Maybe one day it will be!

Thanks!