Hi everyone,

Longtime lurker, so I really appreciate Dato and this supportive community.

Hoping to get some help in the right direction to set up a data / (E)TL workflow from our Google Sheets “database” to DatoCMS.

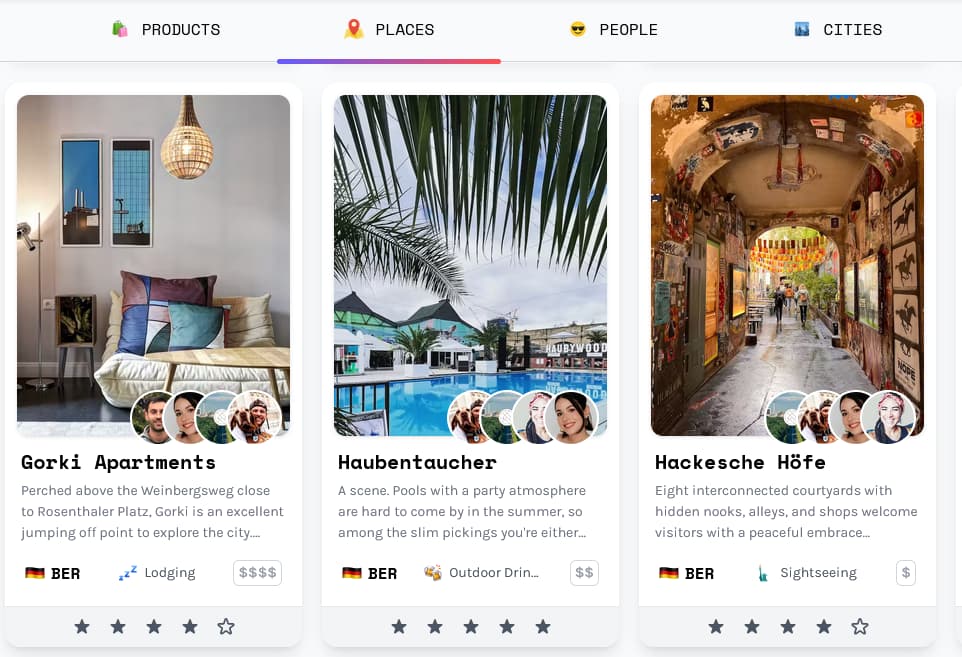

To give you a brief example, our website is connected to DatoCMS, fetching the data and list the datasets as content like this:

A quick overview of our current process and what we want to achieve in the future with DatoCMS:

Current process

-

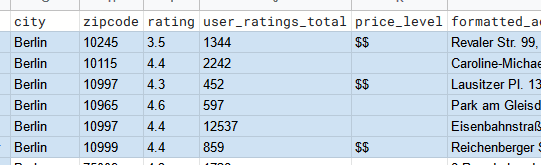

Data collecting and enrichment in Google Sheets

-

Collecting data manually

-

Each row represents an entry and dataset (e.g. a Place or Product on the website)

-

Columns consist of specific, mostly static data

-

Transfer from Google Sheets to DatoCMS

-

Manually going through each row in Google Sheets and look up (specific entry in a column) whether it is a dataset ready to be published on the website

-

If ready then go to DatoCMS backend, create a new Place or Product and copy paste all the data from Google Sheets into the customized user form of the specific collection

-

Upload pictures to the Place/Product manually as well

Desired process

-

Data collecting and enrichment in Google Sheets will be highly automated through API services and different approaches. This task is working well.

-

Transfer from Google Sheets to DatoCMS

-

Identifier column creating, updating, or delete corresponding data in DatoCMS

Current thoughts and developed approach

Our ETL (Extract-Transform-Load) process needs to be way more automated. The “E” part was already taken care of and goes live soon.

What we’re struggling with is the “TL” part, or “Desired process” step 2.

- What is the best approach to exporting data from Google Sheets while keeping it as our source of data?

- What tools could help?

Creation of new data

I developed some data creation and update scripts in node.js locally with VS Code - which worked ok.

I also developed a script which connects to our Google Sheet and retrieves all data of specific worksheets and stores them as an array or JSON. This way we can work with the data easily and are able to identify which datasets can be pushed/created to/in DatoCMS and which not.

I intend to use a primary key in our Google Sheet which we also want to push to DatoCMS, this way we would be able to identify whether the record was already pushed or not. This field would be set to “no duplicates allowed” in the DatoCMS model.

I think after the creation process is done, the best thing to do is to retrieve the internal ID from datoCMS of this record and insert it back into Google Sheets. This way I’d be able to identify the recordset easily for later updates and changes, correct?

How do I retrieve the data (record ID) after creation? Should I use our own primary key from Google Sheets and search all records to it and get back the DatoCMS record ID, is this the best approach?

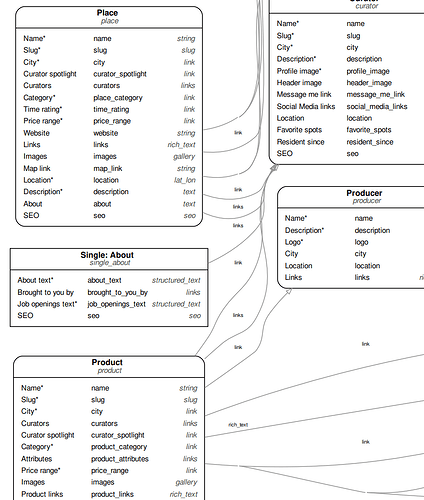

What I am also struggling with is my linked data management. Here is a snippet of our entity relationship diagram (ERD), extracted from DatoCMS.

Let’s assume I want to create data (a new [Place] - see box in the upper left corner of the ERD).

I cannot just use the data as it is in our Google Sheet, correct?

Many of the attributes in this small extract of the Google Sheets are linked fields in our DatoCMS model schema. Of course this is necessary, because the model should be redundancy free (as all relational databases). But how do I solve this issue when uploading data to DatoCMS?

What is the best approach to do so? I came up with 2 ideas, but I’m not very happy with them - but also have no expertise in doing better.

1. idea: While starting the whole uploading process the first step would be to retrieve all existing tables for the linked fields and store them as arrays/temporary tables etc.

While looping through each dataset which will be pushed to DatoCMS I replace every value which is a linked field with the primary key related to it.

This means that the value MUST be correctly spelled, otherwise I’d face issues while uploading.

2. idea: fetch the tables for the linked values and paste them in Google Sheets from time to time. Use them as kind of a data validation list while performing the data entries maybe? I still didn’t figure that out exactly. But this approach is different from doing it on the fly while uploading. Maybe it’s also less prone to errors at the end.

The next question in regards to all the creation/update/deletion processes is how to use them and where to store those scripts.

I came up with an idea but also here I am not familiar with good practices.

My idea is to create a node.js script for each of the processes and store them as cron jobs on an external host. The master data admin would manually trigger them as desired. When triggered a connection to the Google Sheet will be established and the show goes on.

As I already figured out there is no way to integrate all necessary node.js libraries in Google Apps Script, correct? If yes I would be able to create a custom ribbon in Google Sheets and trigger the functions from there directly.

What is the best approach to do so? How do people/companies handle this kind of task?

Appreciate any input. Bit lost!